

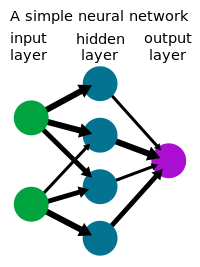

The rapid growth of the Internet has driven a corresponding growth in textual data, and people draw on this large volume of text to find the information they need to solve problems. Textual data may contain latent information such as the opinions of the crowd, opinions about products, or other market-relevant information. Before this information can be used, however, the question of how to extract features from text must be addressed. A model that extracts text features with a neural network is called a neural network language model. Its features build on the n-gram model concept, that is, on the co-occurrence relationships between words. Word vectors are important because sentence vectors and document vectors still depend on the relationships between words; for this reason, this study focuses on word vectors. It assumes that a word carries both its meaning within a sentence and its grammatical position. The study uses a recurrent neural network with an attention mechanism to build a language model, and evaluates it on the Penn Treebank, WikiText-2, and NLPCC2017 text datasets. On these datasets, the proposed models achieve better performance as measured by perplexity.
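
To make the two central notions in the abstract concrete (n-gram co-occurrence and perplexity), the following is a minimal sketch using a count-based bigram model with add-one smoothing. It is not the recurrent attention model proposed in the study; the toy corpus and the function names `bigram_counts`, `bigram_prob`, and `perplexity` are assumptions introduced purely for illustration.

```python
import math
from collections import Counter


def bigram_counts(tokens):
    """Count single words and adjacent-word (bigram) co-occurrences."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def bigram_prob(prev, word, unigrams, bigrams, vocab_size):
    """Estimate P(word | prev) with add-one (Laplace) smoothing."""
    return (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)


def perplexity(tokens, unigrams, bigrams, vocab_size):
    """Exponential of the average negative log-probability per predicted word."""
    log_prob = 0.0
    n = 0
    for prev, word in zip(tokens, tokens[1:]):
        log_prob += math.log(bigram_prob(prev, word, unigrams, bigrams, vocab_size))
        n += 1
    return math.exp(-log_prob / n)


# Hypothetical toy corpus, not one of the datasets used in the study.
train = "the cat sat on the mat".split()
unigrams, bigrams = bigram_counts(train)
vocab_size = len(unigrams)

# A word order seen in training versus one that never occurred.
ppl_seen = perplexity("the cat sat".split(), unigrams, bigrams, vocab_size)
ppl_unseen = perplexity("mat the sat".split(), unigrams, bigrams, vocab_size)
print(f"seen order: {ppl_seen:.2f}, unseen order: {ppl_unseen:.2f}")
```

Lower perplexity means the model assigns higher probability to the observed word sequence, which is why the metric is used to compare language models on a held-out test set.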

Journal Title: Neural Computing and Applications

Year Published: 2019

