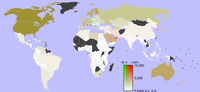

Photo from wikipedia

We introduce a reinforcement learning algorithm inspired by the combinatorial multi-armed bandit problem to minimize the time-averaged energy cost at individual base stations (BSs), powered by various energy markets and local renewable energy sources, over a finite-time horizon. The algorithm sustains traffic demands through two mechanisms: sparse beamforming, which schedules dynamic user-to-BS allocation, and proactive energy provisioning at BSs, which makes price-aware energy management decisions ahead of time. Simulation results indicate that the proposed algorithm reduces the overall energy cost more than recently proposed cooperative energy management designs.
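To illustrate the combinatorial multi-armed bandit idea the abstract refers to, here is a minimal Python sketch of a generic confidence-bound learner that picks a set of `k` options each round to minimize average cost. It is not the paper's algorithm: the function name `cucb_min_cost`, the cost model (Gaussian noise clipped to [0, 1]), the exploration constant, and the reading of "arms" as energy purchase options are all assumptions made for illustration, and beamforming and renewable energy are not modeled.

```python
import math
import random


def cucb_min_cost(mean_costs, k, horizon, seed=0):
    """Generic combinatorial bandit sketch (hypothetical, not the paper's method).

    Each round, choose the k arms with the lowest optimistic (lower-confidence)
    cost estimate, observe a noisy cost for each, and update running averages.
    """
    rng = random.Random(seed)
    n = len(mean_costs)
    counts = [0] * n   # number of times each arm was played
    avg = [0.0] * n    # running average cost per arm
    total_cost = 0.0

    for t in range(1, horizon + 1):
        def lower_bound(i):
            # Unplayed arms get -inf so they are tried first.
            if counts[i] == 0:
                return float("-inf")
            return avg[i] - math.sqrt(1.5 * math.log(t) / counts[i])

        # The "super-arm" is the set of k arms with the smallest lower bounds.
        chosen = sorted(range(n), key=lower_bound)[:k]

        for i in chosen:
            # Assumed cost model: Gaussian noise around the mean, clipped to [0, 1].
            cost = min(1.0, max(0.0, rng.gauss(mean_costs[i], 0.1)))
            counts[i] += 1
            avg[i] += (cost - avg[i]) / counts[i]
            total_cost += cost

    return counts, avg, total_cost / horizon


if __name__ == "__main__":
    counts, avg, mean_cost = cucb_min_cost([0.2, 0.8, 0.3, 0.9, 0.5], k=2, horizon=2000)
    print("plays per arm:", counts)
    print("time-averaged cost per round:", round(mean_cost, 3))
```

In this toy setting the learner should concentrate its plays on the two cheapest arms as the horizon grows, which mirrors, in a much simplified form, the goal of minimizing time-averaged cost over a finite horizon.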

Journal Title: IEEE Communications Letters

Year Published: 2017
