

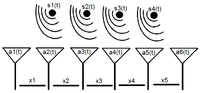

This paper presents an unsupervised multichannel method that separates moving sound sources using amortized variational inference (AVI) for joint separation and localization. A recently proposed blind source separation (BSS) method called neural full-rank spatial covariance analysis (FCA) trains a neural separation model based on a nonlinear generative model of multichannel mixtures and can precisely separate unseen mixture signals. This method, however, assumes that the sound sources hardly move, so its performance degrades easily when the sources do move. In this paper, we solve this problem by introducing time-varying spatial covariance matrices and directions of arrival of the sources into the nonlinear generative model of the neural FCA. This generative model is used to train a neural network that jointly separates and localizes moving sources using only multichannel mixture signals and array geometries. The training objective is derived as a lower bound on the log-marginal posterior probability in the framework of AVI. Experimental results obtained with mixture signals of moving sources show that our method outperformed an existing joint separation and localization method and standard BSS methods.
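The abstract does not spell out how source signals are recovered once the time-varying spatial covariance matrices are available. As a minimal sketch, assuming the multichannel Wiener filter that is commonly paired with full-rank spatial covariance models, the separation step could look like the following. The function name, argument names, and array shapes are illustrative assumptions and are not taken from the paper.

```python
import numpy as np


def multichannel_wiener_filter(x, psd, scm, eps=1e-6):
    """Hypothetical helper: Wiener filtering with time-varying SCMs.

    x:   (F, T, M) complex mixture STFT (F bins, T frames, M microphones)
    psd: (N, F, T) non-negative power spectral densities of N sources
    scm: (N, F, T, M, M) time-varying spatial covariance matrices
    Returns the (N, F, T, M) estimated source images.
    """
    n_mics = x.shape[-1]
    # Covariance of each source image: power times spatial covariance.
    cov = psd[..., None, None] * scm                      # (N, F, T, M, M)
    # The mixture covariance is the sum over sources; eps adds a small
    # diagonal loading term for numerical stability (an assumption).
    mix_cov = cov.sum(axis=0) + eps * np.eye(n_mics)      # (F, T, M, M)
    # Solve mix_cov @ v = x per time-frequency bin instead of inverting.
    v = np.linalg.solve(mix_cov, x[..., None])            # (F, T, M, 1)
    # Apply each source covariance to obtain its image estimate.
    return (cov @ v[None])[..., 0]                        # (N, F, T, M)
```

Because the spatial covariance matrices are indexed by frame, the filter changes over time and can follow a moving source. With `eps=0`, the source estimates sum back to the mixture exactly (up to floating-point error).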

Journal Title: IEEE Signal Processing Letters

Year Published: 2023
