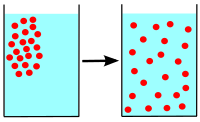

Graph neural networks (GNNs) have attracted increasing attention because of their strong performance in network embedding. GNNs typically follow a message-passing scheme and represent each node by aggregating features from its neighbors. However, current aggregation methods assume that the network structure is static and define local receptive fields over visible connections only, and therefore fail to account for latent or high-order structures. In addition, aggregation methods are known to face a depth dilemma caused by over-smoothing. To address these shortcomings, this article presents a compact graph convolutional network framework that defines graph receptive fields based on diffusion paths and explicitly compresses the neural network with sparsity regularization. The proposed model seeks to learn from invisible connections and recover latent proximity. First, we infer high-order proximity and construct diffusion paths by diffusion sampling. Unlike random-walk sampling, diffusion sampling is based on regions rather than paths. Network inference then yields accurate weights that can be used to build small but informative receptive fields made up of salient neighbors. Second, to exploit deep information while avoiding overfitting, we propose learning a lightweight model by introducing a nonconvex regularizer. Numerical comparisons with existing network embedding methods on unsupervised feature learning and supervised classification demonstrate the effectiveness of our model.
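
To make the idea of a small receptive field built from salient, possibly unconnected neighbors more concrete, the following is a minimal sketch and not the article's method. It assumes a simple stand-in for high-order proximity, a decayed sum of powers of the row-normalized adjacency matrix, whereas the article infers proximity from diffusion sampling and network inference. The function names, the `steps` and `decay` parameters, and the toy graph are all hypothetical.

```python
def row_normalize(adj):
    """Turn an adjacency matrix into a row-stochastic transition matrix."""
    out = []
    for row in adj:
        total = sum(row)
        out.append([v / total if total else 0.0 for v in row])
    return out


def matmul(a, b):
    """Plain dense matrix product for small lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def diffusion_proximity(adj, steps=3, decay=0.5):
    """Assumed proximity: sum over k = 1..steps of decay**k * P**k.

    This is an illustrative substitute for the inferred diffusion weights.
    """
    p = row_normalize(adj)
    n = len(adj)
    power = p
    prox = [[0.0] * n for _ in range(n)]
    for k in range(1, steps + 1):
        for i in range(n):
            for j in range(n):
                prox[i][j] += (decay ** k) * power[i][j]
        power = matmul(power, p)
    return prox


def receptive_field(prox, node, k):
    """Keep the k highest-proximity nodes other than `node` itself."""
    scored = [(j, w) for j, w in enumerate(prox[node]) if j != node and w > 0]
    scored.sort(key=lambda jw: (-jw[1], jw[0]))
    return scored[:k]


def aggregate(features, field):
    """Proximity-weighted mean of the selected neighbors' feature vectors."""
    dim = len(features[0])
    total = sum(w for _, w in field)
    if total == 0:
        return [0.0] * dim
    return [sum(w * features[j][d] for j, w in field) / total
            for d in range(dim)]


# Toy path graph 0 - 1 - 2 - 3 with two-dimensional node features.
adj = [[0, 1, 0, 0],
       [1, 0, 1, 0],
       [0, 1, 0, 1],
       [0, 0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]

prox = diffusion_proximity(adj)
field = receptive_field(prox, node=0, k=2)
embedding = aggregate(features, field)
print(field, embedding)
```

In this toy graph, node 0 has a single visible neighbor (node 1), yet its receptive field also includes node 2, which is reachable only through a two-hop path. Capping the field at `k` nodes keeps the aggregation small; the sparsity regularization described in the abstract acts on the network weights and is not modeled here.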

Journal Title: IEEE transactions on cybernetics

Year Published: 2020
