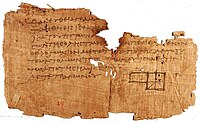

Photo from wikipedia


Using the viewpoint of modern computational algebraic geometry, we explore properties of the optimization landscapes of deep linear neural network models. After clarifying the various definitions of "flat" minima, we show that the geometrically flat minima, which are merely artifacts of residual continuous symmetries of deep linear networks, can be straightforwardly removed by a generalized L_2 regularization. Then, with the help of algebraic geometry, we establish upper bounds on the number of isolated stationary points of these networks. Using these upper bounds and a numerical algebraic geometry method, we find all stationary points for modest depth and matrix size. We show that, in the presence of non-zero regularization, deep linear networks indeed possess local minima that are not global minima. We also show that, although the number of stationary points increases as the number of neurons increases or as the regularization parameter decreases, higher-index saddles are surprisingly rare.
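The continuous symmetry mentioned in the abstract can be illustrated with a small numerical sketch. Everything here is an assumption made for illustration: the two-layer network, the random data, the matrix `A`, and the value of `lam` are hypothetical, and the penalty is an ordinary L_2 (Frobenius) term rather than the generalized regularizer of the paper, whose form the abstract does not give. An ordinary L_2 term breaks only part of the symmetry, since orthogonal rescalings leave it unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data for a two-layer linear network y ≈ W2 @ W1 @ x.
X = rng.normal(size=(3, 20))   # inputs, one column per sample
Y = rng.normal(size=(2, 20))   # targets
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(2, 4))

def loss(W1, W2, lam=0.0):
    """Squared error plus an ordinary L2 penalty with weight lam."""
    residual = W2 @ W1 @ X - Y
    penalty = np.sum(W1**2) + np.sum(W2**2)
    return 0.5 * np.sum(residual**2) + 0.5 * lam * penalty

# An invertible A maps (W1, W2) to a different point with the same product
# W2 @ W1, so the unregularized loss cannot tell the two points apart.
A = np.diag([2.0, 0.5, 3.0, 1.5])
W1_t = A @ W1
W2_t = W2 @ np.linalg.inv(A)

unreg_gap = abs(loss(W1, W2) - loss(W1_t, W2_t))
reg_gap = abs(loss(W1, W2, lam=0.1) - loss(W1_t, W2_t, lam=0.1))
print("gap without regularization:", unreg_gap)
print("gap with L2 regularization:", reg_gap)
```

Without the penalty the two parameter points give the same loss up to rounding, which is the source of the flat directions; with the penalty the losses differ, so this particular rescaling is no longer a symmetry.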

Journal Title: IEEE Transactions on Pattern Analysis and Machine Intelligence

Year Published: 2021
