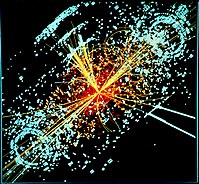

Photo from wikipedia

A neural network is a dynamical system described by two different types of degrees of freedom: fast-changing non-trainable variables (e.g., the states of neurons) and slow-changing trainable variables (e.g., weights and biases). We show that the non-equilibrium dynamics of trainable variables can be described by the Madelung equations if the number of neurons is fixed, and by the Schrödinger equation if the learning system is capable of adjusting its own parameters, such as the number of neurons, step size and mini-batch size. We argue that Lorentz symmetries and curved space-time can emerge from the interplay between stochastic entropy production and entropy destruction due to learning. We show that the non-equilibrium dynamics of non-trainable variables can be described by the geodesic equation (in the emergent space-time) for localized states of neurons, and by the Einstein equations (with cosmological constant) for the entire network. We conclude that the quantum description of trainable variables and the gravitational description of non-trainable variables are dual in the sense that they provide alternative macroscopic descriptions of the same learning system, defined microscopically as a neural network.

Journal Title: Entropy

Year Published: 2022
